In our previous attempt, we applied some machine learning techniques to our data and predicted the values for target variable using AgeGroup, Sex, Pclass and Embarked attributes. Now, we will further explore other attributes and see how much information we can extract.

This time, instead of keeping test set apart, we will merge it into the training data set. This will enable us to collect complete range of values for each attribute, in case there are some missing outs in training set:

> dataset$Dataset <- 'train'

> testset$Dataset <- 'test'

> testset$Survived <- 0

> dataset <- rbind(dataset, testset[,c(1,13,2:12)])

This may look a strange way to merge two data sets, but here's some explanation. The first line adds a column Survived to testset, so that both the dataset and testset have identical columns. The next two lines add another column to identify whether a record is from training set or test set. The last line merges both the data sets using rbind (row bind) function. In the parameters, we defined column sequence of testset so that it is identical to dataset, because when we added Survived column to test set, it went at the end, while in training set, it was 2nd in order.

Let's resume our exploration; we start with Name. At first glance, the name appears to be useless, as it is unique for each passenger. But looking closely, we notice a few things: each name contains a Family name and a Title; FamilyName can be used to create relationships among passengers, while titles like Master, Capt, Sir, Lady tell us people's age, job and the social class they belong to.

We will partition the names into sub parts and extract Title and FamilyName. The following command uses sapply function, which basically applies a function to each element in the vector passed as argument. We pass the Name attribute and apply strsplit function that splits a string based on given criteria, which in our case is either , (comma) or . (period). The next line chops off extra white space.

> dataset$Title <- sapply(dataset$Name, FUN=function(x) {strsplit(as.character(x), split='[,.]')[[1]][2]})

> dataset$Title <- sub(' ', '', dataset$Title)

Let's have a look at the titles we have in the data.

> unique(dataset$Title)

These are 18 distinct titles, out of which, we will merge some to keep our model simple.

Mlle is for Mademoiselle in French and Mme is for Madame. Similarly, Dona is Spanish for Lady. Jonkheer and the Countess are again, titles for noble women. All of these can be merged into one name "Lady".

> dataset$Title[dataset$Title %in% c('Mlle', 'Mme', 'Ms', 'Dona', 'Lady', 'the Countess', 'Jonkheer')] <- 'Lady'

This cuts our distinct values to 13.

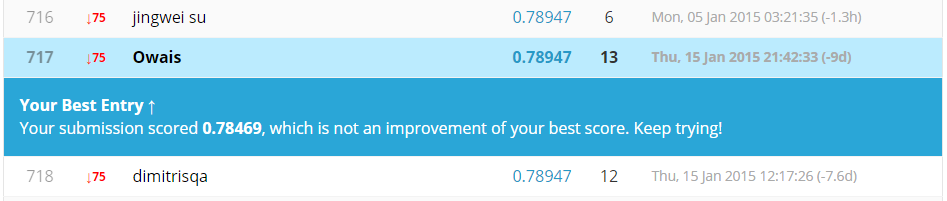

Submitting our predictions on new model with Titles added did not improve our score on Kaggle, but it didn't decrease it as well. So, we will keep this information intact.

We can further notice some Titles like Master, Miss and Ms, which can give us a clue about the age of the passenger. We can make use of these when filling in missing values of age.

Let's search for all passengers with missing age and Title "Master" and fill them with mean age of passengers with Master titles whose age is available. But first, we will reset the Age variable by reading both data sets in a temporary variable and combining to get original values of Age:

> temp.train <- read.csv("train.csv")

> temp.test <- read.csv("test.csv")

> temp.test$Survived <- 0

> tempset <- rbind(temp.train, temp.test[,c(1,12,2:11)])

> dataset$Age <- tempset$Age

> bad <- is.na(dataset$Age)

> dataset$Age[bad & dataset$Title == 'Master'] <- mean(dataset$Age[dataset$Title == 'Master'], na.rm=TRUE)

Fill the missing age for some other titles as well:

> dataset$Age[bad & dataset$Title == 'Miss'] <- mean(dataset$Age[dataset$Title == 'Miss'], na.rm=TRUE)

> dataset$Age[bad & dataset$Title == 'Mr'] <- mean(dataset$Age[dataset$Title == 'Mr'], na.rm=TRUE)

> dataset$Age[bad & dataset$Title == 'Mrs'] <- mean(dataset$Age[dataset$Title == 'Mrs'], na.rm=TRUE)

> dataset$AgePredicted <- 0

> dataset$AgePredicted[bad] <- 1

Fill out the remaining ones and round off before discretizing:

> bad <- is.na(dataset$Age)

> dataset$Age[bad] <- median(dataset$Age[bad], na.rm=TRUE)

> dataset$Age <- round(dataset$Age)

Discretize again as before:

> dataset$AgeGroup <- 'old'

> dataset$AgeGroup[dataset$Age < 2] <- 'infant'

> dataset$AgeGroup[dataset$Age >= 2 & dataset$Age < 13] <- 'child'

> dataset$AgeGroup[dataset$Age >= 13 & dataset$Age < 20] <- 'teenager'

> dataset$AgeGroup[dataset$Age >= 20 & dataset$Age < 40] <- 'young'

> dataset$AgeGroup[dataset$Age >= 40] <- 'old'

Time to see how we have improved in terms of accuracy. But we will not include Title yet in our formula:

This time, instead of keeping test set apart, we will merge it into the training data set. This will enable us to collect complete range of values for each attribute, in case there are some missing outs in training set:

> dataset$Dataset <- 'train'

> testset$Dataset <- 'test'

> testset$Survived <- 0

> dataset <- rbind(dataset, testset[,c(1,13,2:12)])

Let's resume our exploration; we start with Name. At first glance, the name appears to be useless, as it is unique for each passenger. But looking closely, we notice a few things: each name contains a Family name and a Title; FamilyName can be used to create relationships among passengers, while titles like Master, Capt, Sir, Lady tell us people's age, job and the social class they belong to.

We will partition the names into sub parts and extract Title and FamilyName. The following command uses sapply function, which basically applies a function to each element in the vector passed as argument. We pass the Name attribute and apply strsplit function that splits a string based on given criteria, which in our case is either , (comma) or . (period). The next line chops off extra white space.

> dataset$Title <- sapply(dataset$Name, FUN=function(x) {strsplit(as.character(x), split='[,.]')[[1]][2]})

> dataset$Title <- sub(' ', '', dataset$Title)

Let's have a look at the titles we have in the data.

> unique(dataset$Title)

Mlle is for Mademoiselle in French and Mme is for Madame. Similarly, Dona is Spanish for Lady. Jonkheer and the Countess are again, titles for noble women. All of these can be merged into one name "Lady".

> dataset$Title[dataset$Title %in% c('Mlle', 'Mme', 'Ms', 'Dona', 'Lady', 'the Countess', 'Jonkheer')] <- 'Lady'

Submitting our predictions on new model with Titles added did not improve our score on Kaggle, but it didn't decrease it as well. So, we will keep this information intact.

We can further notice some Titles like Master, Miss and Ms, which can give us a clue about the age of the passenger. We can make use of these when filling in missing values of age.

Let's search for all passengers with missing age and Title "Master" and fill them with mean age of passengers with Master titles whose age is available. But first, we will reset the Age variable by reading both data sets in a temporary variable and combining to get original values of Age:

> temp.train <- read.csv("train.csv")

> temp.test <- read.csv("test.csv")

> temp.test$Survived <- 0

> tempset <- rbind(temp.train, temp.test[,c(1,12,2:11)])

> dataset$Age <- tempset$Age

> bad <- is.na(dataset$Age)

> dataset$Age[bad & dataset$Title == 'Master'] <- mean(dataset$Age[dataset$Title == 'Master'], na.rm=TRUE)

Fill the missing age for some other titles as well:

> dataset$Age[bad & dataset$Title == 'Miss'] <- mean(dataset$Age[dataset$Title == 'Miss'], na.rm=TRUE)

> dataset$Age[bad & dataset$Title == 'Mr'] <- mean(dataset$Age[dataset$Title == 'Mr'], na.rm=TRUE)

> dataset$Age[bad & dataset$Title == 'Mrs'] <- mean(dataset$Age[dataset$Title == 'Mrs'], na.rm=TRUE)

> dataset$AgePredicted <- 0

> dataset$AgePredicted[bad] <- 1

Fill out the remaining ones and round off before discretizing:

> bad <- is.na(dataset$Age)

> dataset$Age[bad] <- median(dataset$Age[bad], na.rm=TRUE)

> dataset$Age <- round(dataset$Age)

Discretize again as before:

> dataset$AgeGroup <- 'old'

> dataset$AgeGroup[dataset$Age < 2] <- 'infant'

> dataset$AgeGroup[dataset$Age >= 2 & dataset$Age < 13] <- 'child'

> dataset$AgeGroup[dataset$Age >= 13 & dataset$Age < 20] <- 'teenager'

> dataset$AgeGroup[dataset$Age >= 20 & dataset$Age < 40] <- 'young'

> dataset$AgeGroup[dataset$Age >= 40] <- 'old'

> rpart_fit <- rpart(formula=Survived ~ Sex + AgeGroup + Pclass + Embarked, data=dataset[dataset$Dataset == 'train',], method="class")

> prp(rpart_fit, type=1, extra=100, box.col=c("pink", "palegreen3")[rpart_fit$frame$yval], cex=0.6)

> testset$Survived <- predict(rpart_fit, dataset[dataset$Dataset == 'test',], type="class")

> submit <- data.frame(PassengerId=testset$PassengerId, Survived=testset$Survived)

> write.csv(submit, file="rpart_relative_ages.csv", row.names=FALSE)

No improvement?! Well, how about adding some more variables. We already have Title, another can easily be added. The Cabin shows the cabin numbers that a passenger has booked, we can find out whether or not a passenger has booked cabins and introduce HasCabin as a new variable.

> dataset$HasCabin <- 1

> dataset$HasCabin[dataset$Cabin == ''] <- 0

We will also make use of SibSp and Parch variables too:

> formula <- Survived ~ Sex + AgeGroup + Pclass + Embarked + HasCabin + Title + SibSp + Parch

> rpart_fit <- rpart(formula, data=dataset[dataset$Dataset == 'train',], method="class")

> prp(rpart_fit, type=1, extra=100, box.col=c("pink", "palegreen3")[rpart_fit$frame$yval], cex=0.6)

> testset$Survived <- predict(rpart_fit, dataset[dataset$Dataset == 'test',], type="class")

> submit <- data.frame(PassengerId=testset$PassengerId, Survived=testset$Survived)

> write.csv(submit, file="rpart_family_attr.csv", row.names=FALSE)

Let's see how we do now with such a load of new information.

This is heartbreaking, I admit. But don't give up just yet. We have more variables to explore, only this time, we'll be a little aggressive ;-)

Have a look at Fare. It is a floating continuous value with only 1 missing record. We will do two things with this one:

1. Round off

2. Fill in missing value

3. Discretize

The first two steps are quick:

> dataset$Fare <- round(dataset$Fare)

> bad <- is.na(dataset$Fare)

> dataset$Fare[bad] <- median(dataset$Fare, na.rm=TRUE)

For the third step, we won't just discretize on judgement this time, but rather use a smart algorithm in "caret" library, k-means that creates clusters or groups of data by maximizing their distances. This article will give you a thorough description of what we are talking about here.

First, have a quick glance at how the data is distributed; this is to find out the ideal number of clusters we want to make.

> plot(table(dataset$Fare), ylim=c(0,75))

Do you notice some gaps? There's a very thin gap between 0 and next value and huge gap between 512 and a step before. Observing closely, we can visually see that there are at least 7 groups in the data. We can call k-means algorithm to create 7 clusters of the Fare variable.

> library(caret)

> kmeans(x=dataset$Fare, centers=7, iter.max=1000)$centers

[,1]

1 133.391304

2 28.755556

3 0.600000

4 68.291925

5 237.882353

6 9.943759

7 512.000000

What you see above is the virtual center points that the algorithm has created for these clusters. We will create a new variable FareGroup and assign every record a cluster number suggested by k-means:

> k <- kmeans(x=dataset$Fare, centers=7, iter.max=1000)

> dataset$FareGroup <- k$cluster

> table(dataset$FareGroup)

1 2 3 4 5 6 7

38 19 517 46 161 270 258

The k$cluster has a cluster number for each index - assigned to the FareGroup. The table shows how the records are distributed among the clusters. There's one extra tweak here, I noticed that none of the passengers who paid the amount below 6.0 GBP survived. We can create an additional cluster for such passengers as well.

dataset$FareGroup[dataset$Fare < 6] <- 0

Now, we have another refined variable to add to our rpart model. Let's give it another shot, but we'll go without Title to make sure that it's the FareGroup which increases the accuracy (if it did):

> formula <- Survived ~ Sex + AgeGroup + Pclass + Embarked + HasCabin + SibSp + Parch + FareGroup

> rpart_fit <- rpart(formula, data=dataset[dataset$Dataset == 'train',], method="class")

> prp(rpart_fit, type=1, extra=100, box.col=c("pink", "palegreen3")[rpart_fit$frame$yval], cex=0.6)

> testset$Survived <- predict(rpart_fit, dataset[dataset$Dataset == 'test',], type="class")

> submit <- data.frame(PassengerId=testset$PassengerId, Survived=testset$Survived)

> write.csv(submit, file="rpart_faregroup.csv", row.names=FALSE)

It WORKED! We are now at 628th position. But there's a long journey ahead. Next, we will push our limits on data preparation by learning the missing values. And also see how we can fine-tune recursive partition learning algorithm to fit our data better.

Comments

Post a Comment